The post Top Free and Open-Source Speech Recognition Software appeared first on Top ITFirms - Result of In-depth Research & Analysis.

This excerpt illustrates the need for speech recognition software, its features, and answers to frequently asked questions about its use and adaptability!

Table of Contents

- Why do we need voice recognition software?

- How reliable is voice recognition?

- How do you recognize a speech?

- Can we make speech recognition software do more than just typing?

- Is voice-to-text conversion software device-dependent?

- Which prevalent speech recognition programs are the best?

- List of best open source speech recognition software.

- Simon

- Kaldi

- CMUSphinx

- Mozilla

- Julius

- Dictation Bridge

- Mycroft

- Are there any disadvantages to open source voice recognition software?

- Conclusion

Speech recognition grew out of computer science and computational linguistics, which developed methods for recognizing spoken language and converting it into text. As you speak, the computer recognizes and types what you say. You can therefore use your voice to write emails, documents, social media posts, and blog posts, which gives you a chance to organize your thoughts better. The main measures used to evaluate speech recognition software are word error rate, accuracy, speed, and ROC curves.
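To make the first of those measures concrete, here is a minimal sketch of how word error rate is commonly computed: the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and the recognizer's output, divided by the number of reference words. The function name and the lowercase, whitespace-based tokenization are assumptions made for illustration, not part of any tool listed in this post.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    # Assumption: simple lowercase + whitespace tokenization.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in output
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One word ("the") is dropped out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

Note that word error rate can exceed 1.0 when the output contains many inserted words, so it is not simply "one minus accuracy".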

Why do we need voice recognition software?

Voice recognition software lets your computer type what you dictate. It can also correct grammatical mistakes and filter what you say before finally converting it into text.

How reliable is voice recognition?

In 2017, Google reported that its voice-to-text recognition had reached a 95% accuracy rate. That seemed impressive, but the technology still carries significant gender and racial bias, and its accuracy still lags depending on whether the voice is male or female.

How do you recognize a speech?

Speech recognition software detects a voice and converts what it says into text. It takes a waveform, splits it into utterances separated by silences, and tries to recognize what is being said in each utterance. To do that, it considers the possible combinations of words and tries to match them with the audio.
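The splitting step can be sketched with a simple energy threshold: frames whose average amplitude stays below a threshold for long enough are treated as silence, and everything between silences is one utterance. This is only an illustrative sketch. The function name, frame size, threshold, and minimum silence length are all assumed values, and real recognizers use more robust voice activity detection.

```python
def split_utterances(samples, frame_size=160, threshold=0.02, min_silence_frames=3):
    """Return (start, end) sample indices of stretches separated by silence.

    samples: a list of floats in the range [-1.0, 1.0].
    All default parameter values are assumptions chosen for the example.
    """
    utterances = []
    start = None      # sample index where the current utterance began
    voiced_end = 0    # sample index just past the last non-silent frame
    silent_run = 0    # consecutive silent frames inside an utterance

    for frame_start in range(0, len(samples), frame_size):
        frame = samples[frame_start:frame_start + frame_size]
        energy = sum(abs(s) for s in frame) / len(frame)
        if energy >= threshold:
            if start is None:
                start = frame_start
            voiced_end = frame_start + len(frame)
            silent_run = 0
        elif start is not None:
            silent_run += 1
            if silent_run >= min_silence_frames:
                # Enough silence: close the utterance at the last voiced frame.
                utterances.append((start, voiced_end))
                start = None

    if start is not None:
        utterances.append((start, voiced_end))
    return utterances


if __name__ == "__main__":
    # Synthetic signal: silence, speech, a long pause, speech, short silence.
    signal = [0.0] * 480 + [0.5] * 800 + [0.0] * 640 + [0.3] * 320 + [0.0] * 160
    print(split_utterances(signal))
```

Each returned span would then be passed to the recognizer on its own, which keeps the search over word combinations manageable.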

Can we make speech recognition software do more than just typing?

Voice recognition software comes pre-loaded with commands that let the user open and close programs and change settings, so you can do various things with your computer without even touching it.
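One way to picture such built-in commands is a lookup from recognized phrases to actions. The sketch below is hypothetical: the phrases, the action names, and the substring matching are invented for illustration and do not describe how any particular product works. It returns an action name instead of running anything, so it is safe to try.

```python
# Hypothetical phrase-to-action table; a real product ships its own set.
COMMANDS = {
    "open browser": "launch_browser",
    "close window": "close_active_window",
    "volume up": "increase_volume",
}


def match_command(transcript, commands=COMMANDS):
    """Return the action name for the first known phrase in the transcript."""
    # Normalize case and collapse repeated whitespace before matching.
    normalized = " ".join(transcript.lower().split())
    for phrase, action in commands.items():
        if phrase in normalized:
            return action
    return None  # Not a command: treat the transcript as dictated text.


if __name__ == "__main__":
    print(match_command("Please open browser now"))
    print(match_command("Dear team, thanks for the update"))
```

Anything that does not match a command falls through to ordinary dictation, which is how one engine can serve both typing and control.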

Is speech-to-text conversion software device-dependent?

Speech recognition software consumes considerable computing resources, so you need a reasonably powerful device: probably Windows 10 or later, with a processor of at least 2.6 GHz and at least 6 GB of RAM.

Which prevalent speech recognition programs are the best?

This list is illustrative; we will be listing more subsequently:

- Siri

- Dragon Professional

- Dragon Anywhere

- Google Now

- Google Cloud Speech API

- Google Docs Voice Typing

- Amazon Lex

ITFirms suggests a list of best open source speech recognition software, as follows:

Simon

It is a free and open-source speech recognition program that converts speech to text for any language or dialect it supports. It makes use of KDE libraries and can be coupled with CMU Sphinx and/or Julius together with the HTK, and it runs on Windows and Linux.

Kaldi

Kaldi is a speech recognition toolkit that supports linear transforms, MMI, boosted MMI and MCE discriminative training, deep neural networks, and feature-space discriminative training.

CMUSphinx

It makes use of mel-frequency cepstral coefficient (MFCC) features combined with noise tracking and spectral subtraction for noise reduction. The various types of MFCC differ in several parameters, but these differences have little effect on accuracy.
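Spectral subtraction itself is simple to sketch: estimate the noise magnitude in each frequency bin, subtract it from every frame, and clamp the result so it never goes negative. The code below is a minimal illustration and not CMUSphinx's implementation. It assumes the magnitude spectra are already computed, and it estimates noise from the first few frames (assumed to contain no speech) rather than tracking it continuously.

```python
def spectral_subtraction(frames, noise_frames=2, floor=0.0):
    """Subtract an estimated noise spectrum from each magnitude spectrum.

    frames: list of magnitude spectra, each a list of floats of equal length.
    noise_frames: number of leading frames assumed to be noise only.
    floor: lowest value allowed after subtraction.
    """
    if noise_frames < 1 or len(frames) < noise_frames:
        raise ValueError("need at least noise_frames frames")
    n_bins = len(frames[0])

    # Average the leading frames to get a per-bin noise estimate.
    noise = [
        sum(frame[k] for frame in frames[:noise_frames]) / noise_frames
        for k in range(n_bins)
    ]

    # Subtract the estimate and clamp at the floor.
    return [
        [max(frame[k] - noise[k], floor) for k in range(n_bins)]
        for frame in frames
    ]


if __name__ == "__main__":
    spectra = [[1.0, 2.0], [1.0, 2.0], [4.0, 2.5], [0.5, 6.0]]
    for cleaned in spectral_subtraction(spectra):
        print(cleaned)
```

A tracking approach would keep updating the noise estimate during pauses instead of fixing it once at the start.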

Mozilla

Mozilla's work focuses on DeepSpeech, an automatic speech recognition engine that aims to make speech recognition technology and trained models openly available to developers. It offers a simple application programming interface to a deep-learning-based ASR engine.

Julius

It is a large vocabulary continuous speech recognition (LVCSR) decoder, based on word N-grams and context-dependent HMMs, that performs real-time decoding on devices ranging from microcomputers to cloud servers. Its features include on-the-fly recognition for microphone and network input, GMM-based input rejection, successive decoding that delimits input by short pauses, N-best output, word graph output, forced alignment at the word level, confidence scoring, and a server mode with a control API.
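To show what a word N-gram model contributes, here is a toy sketch that trains a bigram model with add-one smoothing and uses it to rank candidate transcripts, much as a language model helps choose among N-best hypotheses. It is not Julius code. The function names, the tiny training corpus, and the smoothing choice are all assumptions made for the example.

```python
import math
from collections import Counter


def train_bigram(sentences):
    """Count bigrams and their left contexts over whitespace-tokenized text."""
    contexts, bigrams, vocab = Counter(), Counter(), {"</s>"}
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        vocab.update(words[1:])
        contexts.update(words[:-1])
        bigrams.update(zip(words, words[1:]))
    return contexts, bigrams, len(vocab)


def log_prob(sentence, model):
    """Log-probability of a sentence under the add-one-smoothed bigram model."""
    contexts, bigrams, vocab_size = model
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    total = 0.0
    for prev, word in zip(words, words[1:]):
        # Add-one smoothing keeps unseen bigrams from getting zero probability.
        total += math.log((bigrams[(prev, word)] + 1) / (contexts[prev] + vocab_size))
    return total


def rank_n_best(hypotheses, model):
    """Sort candidate transcripts from most to least likely."""
    return sorted(hypotheses, key=lambda h: log_prob(h, model), reverse=True)


if __name__ == "__main__":
    model = train_bigram(["recognize speech", "recognize speech quickly", "a nice beach"])
    print(rank_n_best(["wreck a nice beach", "recognize speech"], model))
```

In a real decoder the language model score is combined with an acoustic score from the HMMs, and this sketch covers only the language model side.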

Dictation Bridge

It is a screen reader add-on that serves as a gateway between the NVDA and JAWS screen readers on one side and Windows Speech Recognition and Dragon NaturallySpeaking on the other. It has the potential to change how you work with computers using voice recognition.

Mycroft

It is a customizable, open-source voice stack that is easy to deploy and can be used in anything from a science project to a global enterprise environment. It offers speech-to-text, text-to-speech, intent parsing, a modular and interoperable design, and wake word and keyword spotting through its Precise wake word engine.

Are there any disadvantages to open source voice recognition software?

Like every program or app, speech recognition software is built on human-written computation, algorithms, business logic, and, where applicable, artificial intelligence. Speech-to-text converters therefore come with some challenges:

- It might be a little less accurate

- This software can often misinterpret what is being said

- Developing this software can be time-consuming and costly

- The accuracy and performance might not be perfect

- It becomes difficult sometimes to remove the background noise interference

- And many more physical side effects

Conclusion:

It is now easy to build applications that need speech-to-text and text-to-speech capability. Such software is highly useful for sending long emails and for reading and editing long documents, because it minimizes typing. You can create such software for mobile, web, or desktop. If you are looking to create speech recognition software solutions, discuss them with our team.

The post Why did AI-Based Voice Assistants lower their orbits around Tech? appeared first on Top ITFirms - Result of In-depth Research & Analysis.

How image and speech recognition, natural language dialogs, non-linear optimization, and real-time process control will shape the future of AI and voice assistants!

AI's potential is unbounded and largely untapped. Surveys and reports from sources including PwC, TechTrends, and Forbes predict that AI initiatives will deliver growth in revenue and profits and improvements in customer experience and human decision making in the coming years. Business leaders expect AI not only to lower costs but also to create new revenue.

Implementing AI

Organizations need to change their setups and workforce to accommodate AI, develop strategies for trust and data, and converge AI with other technologies. Such plans can have far-reaching effects, and if they pan out, many organizations in the US will become AI-enhanced, not just in pockets of the organization but throughout their operations.

As machine learning, artificial intelligence, and deep learning have become interwoven into daily life in recent years, they have transformed several industries, building small blocks of value into a transformative whole.

Defining an AI strategy, finding AI-literate workers (engineers), and training existing staff can be sticking points with AI. The answers to these questions often vary from one company to the next, and the environment is continually evolving.

AI Works in Four Different Ways

The four ways in which AI works:

| | Humans in Loop | No Humans in Loop |
| --- | --- | --- |
| Hardwired/Specific Systems | Assisted Intelligence: AI systems that assist humans in making decisions. These are hard-wired systems that do not learn from their interactions. | Automation: Automation of manual and cognitive tasks that are either routine or non-routine. This does not involve new ways of doing things; it automates existing tasks. |
| Adaptive Systems | Augmented Intelligence: AI systems that augment human decision making and continuously learn from their interactions with humans and the environment. | Autonomous Intelligence: AI systems that can adapt to different situations and can act autonomously without assistance. |

Future Predictions for AI Implementation

Any technological breakthrough comes with a certain hype revolving around it. More businesses will launch initiatives involving AI in the coming years. Major predictions for the future of AI: (in no particular order)

- AI projects might fail due to a lack of focus on the aims and expectations of the initiative. Such projects are massive and expensive, and they are trending towards plug-and-play, one-size-fits-all, as-a-service, or template approaches to data science, which may or may not suit every organization’s aims.

- The initiatives and projects likely to succeed are those envisaged with a clear strategy from the start and with results tied to bottom-line KPIs such as revenue growth and customer satisfaction scores.

In the context of this blog, the way people interact with technology will continue to shift towards voice.

- Conversational interfaces will become more prominent for interacting with technology in business environments, in the same way that Echo and Alexa made their way into our homes.

- Voice-enabled interfaces are a more natural way of communicating than existing point-and-click dashboards and systems. They also help structure any query in a matter of seconds.

- Computers are smart machines that have adapted to human intelligence, so we need to spend less time learning their complicated mathematical language. Along with this, natural language generation and natural language processing algorithms have been refined to better understand us and talk to us in a way we understand. We already have robots that work on similar mechanisms and converse within limited parameters, just as we would with another human.

- Smart voice assistants have changed the online searching and shopping experience, as voice-enabled sensors create a hyper-personalized experience for users.

- Some of the companies that have become part of AI-based innovations: Yitu Technology, JD, MiningLamp, Megvii, Qihoo 360, PingAn, Tomorrow Advancing Life and Xiaomi.

- Research on the security risks of AI will be focused on five aspects:

- Algorithm security

- Data security

- Intellectual property rights

- Social employment

- Legal responsibility

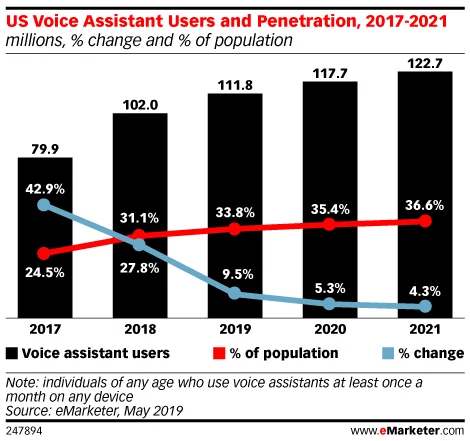

The number of people using voice-controlled technology, including Apple’s Siri, Samsung’s Bixby, Google Assistant, and Microsoft Cortana, has increased manifold in the USA. The use of voice assistants is expected to increase across all age groups as the number of internet and smartphone users grows. Most people use them because it is fun, spoken language feels more natural than typing, they are easier for children to use, and they let people use the device without their hands.

Besides Google Assistant, Amazon Alexa, Microsoft’s Cortana, and Apple’s Siri, many innovative voice-controlled devices are available in the market these days, including: Vocca Pro Light, Honeywell Thermostats, Haiku Home Ceiling Fans, Moto Hint, Anova Precision Cooker, Radar Pace, Netgear Arlo Pro, Jibo, Hiku, Nest, Whirlpool, Belkin WeMo Light Switch and Smart Plug, Toymail.co, GreenIQ Smart Garden Hub, Ooma Telo, Garageio, iRobot Roomba, etc.

Among their several benefits, voice-enabled AI-based devices help automate regular tasks such as vacuuming, unplugging appliances from wall sockets, regulating temperature, switching off the microwave, and guesstimating the perfect juiciness of your steak. Voice assistants not only save time but also improve the quality of life. They are easy to use, work across various devices, respond to commands, and help people do various tasks: getting driving directions, making phone calls, sending text messages, checking the weather, playing music, adjusting AC temperature, changing channels on television, raising or lowering sound or brightness, setting alarms, telling jokes, flipping a coin, telling a story, running voice-based searches on a search engine, booking travel tickets, and even personal banking.

Image Source: https://bit.ly/33cTQB4

As brands strive to expand voice interactions beyond transactional use cases to more conversational and complex engagements, designers (more than developers) will play a critical role in making voice experiences as user-friendly and intuitive as touchscreens are these days.

Should voice technology assistants be human-like? Not necessarily

Many brands strive to design voice assistants or virtual assistants that can hold a human-like conversation and make the customer care process as natural as possible. But views are still split: most consumers prefer talking to robots, and some don’t. Some robots often fail to understand what is being asked and might not recognize the voice at times.

This calls for designers who follow unique approaches and take a discerning look at the needs of users. Brands need to prioritize creating voice assistants and experiences that are easy to use and intuitive. It is the context of use that ultimately drives natural-feeling interactions and greater adoption of, and comfort with, this emerging medium. For example, voice-powered refrigerators and microwaves probably don’t need human attributes, but a conversational GPS could benefit from them.

The World Artificial Intelligence Conference 2019 in Shanghai described artificial intelligence in terms of intelligent connectivity and infinite possibilities.

Major visionaries from across the globe converged for insightful discussions that piqued everyone’s imagination about the future of the AI world. Examples of major tech innovations in AI assistants:

- Shanghai-based AI Unicorn Company – DeepBlue has created (1) a third-generation road-sweeper backed by 5G technology and (2) AI autonomous driving as well as a 5G bus named Panda.

- Chinese tech giant Tencent unveiled its self-developed intelligent inspection and manipulation robot, equipped with 5G-enabled real-time remote teleoperation technology and AI algorithms. It can be helpful in hazardous industrial settings such as chemical plants and mining operations.

Collective Agreement

AI can transform nearly everything about business and markets. App development companies can bank on this opportunity, which is a good reason to take action. Done correctly, developing an AI model for one specific task can enhance an existing process or solve a well-defined business problem while creating the potential to scale to other parts of the enterprise.

Voice interactions today are transactional and straightforward, but users will increasingly use them for more complex activities such as booking a medical appointment, requesting hotel amenities, or ordering grocery delivery. Top AI companies can simplify such interactions by combining voice with the screen. Interactions improve when a voice interface is designed intentionally, by people with first-hand knowledge of voice-enabled products and expertise in the UX of the apps.
